Frankfurt and Tokyo

We are pleased to announce that we have added CDN serving nodes in Frankfurt, Germany and Tokyo, Japan. This gives us increased capacity in Europe, where we are growing rapidly. It also improves our performance in the Northeast Asia/Pacific region, where requests were previously hitting our serving nodes in Singapore.

Visual Perspective

For the curious, here is what our CDN actually looks like:

You might see only 10 locations, but there are in fact 12 data centers, since we have dual facilities in two of them.

- New York, New York

- Atlanta, Georgia

- Dallas, Texas

- San Francisco, California

- Vancouver, Canada

- London, United Kingdom (two facilities)

- Amsterdam, Netherlands (two facilities)

- Frankfurt, Germany

- Singapore

- Tokyo, Japan

Why Private CDN?

It occurs to me that we've never blogged about our decision to build our own private CDN. When I tell people about it, especially CDN providers, they must think I'm crazy. That is hardly the case. You see, in the past we did use various CDN providers. Guess what? We had downtime with all of them. Worse, the downtime was often isolated, and the only way we ever noticed it was when a customer in an affected location contacted us. How embarrassing, right? But that wasn't even the worst part. The worst part was that we couldn't do a darned thing about it! We were stuck waiting for the CDN provider to fix it.

Those experiences, along with our obsession with being the fastest ad server and absolutely guaranteeing 99.9% uptime, are what drove us to create our own private CDN.
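For scale, a 99.9% uptime guarantee still leaves a downtime budget of just under nine hours over a 365-day year:

$$
(1 - 0.999) \times 365 \times 24\ \text{h} = 8.76\ \text{h} \approx 8\ \text{h}\ 46\ \text{min}
$$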

I'm happy to report that the latest generation of our CDN has had 100% uptime over the last 365 days. How did we manage that? It all comes down to one thing: eliminating single points of failure at every level.

For DNS we use Amazon Route 53 with latency-based routing and multiple health checks per second on every single node. This ensures that we route you to the fastest data center, which is not necessarily the closest one! We can also fail over rapidly and automatically, whether a single node or an entire data center goes down. We can manually stop traffic to individual nodes as well, so we can make configuration changes or perform upgrades without impacting service.
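The routing decision described above can be modeled in a few lines. This is a minimal sketch, not Route 53's API or implementation. It assumes a hypothetical `Node` record and a table of latencies measured from the client's network. It picks the lowest-latency data center that still has at least one node that is both passing health checks and not manually drained.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """One serving node as the DNS layer sees it (hypothetical model)."""
    name: str
    data_center: str
    healthy: bool = True   # outcome of the most recent health checks
    enabled: bool = True   # False when an operator drains it for maintenance


def pick_data_center(nodes, latency_ms):
    """Return (data_center, [node names]) for the lowest-latency data center
    that still has at least one healthy, enabled node, or None if none do.

    latency_ms maps data-center name -> latency measured from the client's
    network (an assumed input; it is not a real Route 53 data structure).
    """
    # Group the nodes that are currently eligible for traffic by data center.
    available = {}
    for node in nodes:
        if node.healthy and node.enabled:
            available.setdefault(node.data_center, []).append(node.name)

    # Pick the fastest data center with live nodes; this may not be the
    # geographically closest one.
    candidates = [dc for dc in available if dc in latency_ms]
    if not candidates:
        return None
    best = min(candidates, key=lambda dc: latency_ms[dc])
    return best, available[best]


if __name__ == "__main__":
    nodes = [Node("tyo-1", "tokyo"), Node("tyo-2", "tokyo"), Node("sin-1", "singapore")]
    latency = {"tokyo": 40, "singapore": 75}
    print(pick_data_center(nodes, latency))  # ('tokyo', ['tyo-1', 'tyo-2'])
    nodes[0].healthy = False  # tyo-1 fails its health checks
    nodes[1].enabled = False  # tyo-2 is drained for an upgrade
    print(pick_data_center(nodes, latency))  # ('singapore', ['sin-1'])
```

A failed health check and a manual drain have the same effect on routing in this model. That is what allows upgrades without impacting service: traffic simply moves to the next-fastest location.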

As for the actual serving nodes, we work with multiple cloud providers, which further insulates us from failure and gives us a huge bandwidth capacity of 27 Gbps!

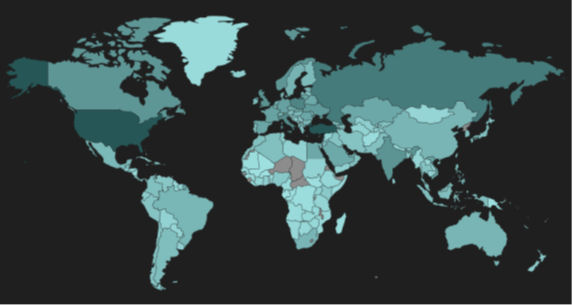

Monitoring is the other key part of the equation. We can visually inspect the traffic to each of our data centers and individual serving nodes in real time with Logstash, Elasticsearch, MaxMind GeoIP and Kibana. For example, here is a global heat map that we have a lot of fun watching on our office wall:

As you can see, there aren't many countries that we don't serve ads to, which underscores why a CDN is so important. We work with a lot of news and sports sites, so we can actually see when big events are happening in different parts of the world. But, hey, this isn't just for fun. If you call us saying your ads are loading slowly, we can pull up a log of your requests by your IP address. From there we can see which data center was serving you, and if there's a problem we can immediately re-route requests around the problem area until it's resolved. Of course, we've yet to get any such calls, because latency-based routing is extremely effective at routing around those sorts of problems. Still, it's nice to know we can take direct action when we need to, because we are in complete control.
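Conceptually, the per-customer lookup is a filter and group-by over the request logs. This is a minimal sketch. It assumes log lines have already been parsed into dicts with hypothetical `client_ip`, `data_center` and `response_ms` fields. In the Elasticsearch/Kibana setup described above, the same question would presumably be asked as a query rather than in Python.

```python
from collections import defaultdict


def summarize_client(records, client_ip):
    """Group one client's requests by the data center that served them.

    records: iterable of dicts with the (hypothetical) keys
    'client_ip', 'data_center' and 'response_ms'.
    Returns {data_center: (request_count, mean_response_ms)}.
    """
    by_dc = defaultdict(list)
    for rec in records:
        if rec["client_ip"] == client_ip:
            by_dc[rec["data_center"]].append(rec["response_ms"])
    return {dc: (len(times), sum(times) / len(times)) for dc, times in by_dc.items()}


logs = [
    {"client_ip": "203.0.113.7", "data_center": "frankfurt", "response_ms": 18},
    {"client_ip": "203.0.113.7", "data_center": "frankfurt", "response_ms": 22},
    {"client_ip": "198.51.100.4", "data_center": "tokyo", "response_ms": 35},
]

if __name__ == "__main__":
    print(summarize_client(logs, "203.0.113.7"))  # {'frankfurt': (2, 20.0)}
```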

To round things out, we also use Pingdom to monitor response times to each of our data centers from dozens of locations all around the world.

Making It Faster

One of the other problems we faced with CDN providers was that each region in their network had to fetch its own copies of files. Furthermore, they would only cache files for a brief period of time. This often resulted in slow loads, which is exactly what a CDN is supposed to prevent, right? Well, we thought so too. To solve this problem, when a new file is uploaded we push it to our CDN loader, which then permanently replicates the file to all of our CDN nodes. Thus, every node has a copy of every file and never needs to fetch it over the network. That's pretty awesome, right? But wait, isn't that CDN loader a single point of failure? Good question! Technically it is, yes, but the CDN nodes are designed to fetch files themselves if they lose contact with the CDN loader for any reason. They are smart about how they do that, too, trying to get a copy of the file from a nearby neighboring node instead of going all the way to the origin unless they have to.
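Here is a minimal sketch of the push-plus-fallback scheme. It uses in-memory stand-ins, and `Origin`, `CdnLoader` and `CdnNode` are hypothetical names. The loader pushes every upload to each node it can reach. A node that missed an upload fills the gap on demand by trying its neighbors in order of proximity, and falls back to the origin only if none of them has the file. Whether real nodes fetch on demand like this or resync proactively is an assumption here.

```python
class Origin:
    """Stand-in for origin storage (hypothetical)."""
    def __init__(self):
        self.files = {}


class CdnNode:
    def __init__(self, name, origin):
        self.name = name
        self.origin = origin
        self.files = {}       # permanent local copies
        self.neighbors = []   # other nodes, ordered closest first

    def get(self, path):
        """Return (data, source), filling a gap from the nearest copy available."""
        if path in self.files:
            return self.files[path], "local"
        for neighbor in self.neighbors:           # closest neighbors first
            if path in neighbor.files:
                self.files[path] = neighbor.files[path]
                return self.files[path], neighbor.name
        data = self.origin.files[path]            # last resort; KeyError if unknown
        self.files[path] = data
        return data, "origin"


class CdnLoader:
    def __init__(self, origin, nodes):
        self.origin = origin
        self.nodes = nodes

    def upload(self, path, data, reachable=None):
        """Store a new file and push a permanent copy to every reachable node.

        reachable: optional set of node names, used here to simulate nodes
        that have lost contact with the loader.
        """
        self.origin.files[path] = data
        for node in self.nodes:
            if reachable is None or node.name in reachable:
                node.files[path] = data


if __name__ == "__main__":
    origin = Origin()
    fra, ams, tyo = (CdnNode(n, origin) for n in ("fra", "ams", "tyo"))
    fra.neighbors = [ams, tyo]
    loader = CdnLoader(origin, [fra, ams, tyo])

    loader.upload("/ads/banner.png", b"...")
    print(tyo.get("/ads/banner.png")[1])  # local

    # Frankfurt misses this upload, so it borrows Amsterdam's copy.
    loader.upload("/ads/new.png", b"...", reachable={"ams", "tyo"})
    print(fra.get("/ads/new.png")[1])     # ams
```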

Future Improvements

Throughout the next year we plan to add even more locations to our CDN. We are currently considering Brazil, India, Istanbul (Turkey), Moscow (Russia) and Sydney (Australia) as our next locations. Of course, if you are in any of those locations now, your ads are probably already loading fast. That is because of the many peering relationships our data centers maintain with other networks. For example, I can ping our Tokyo data center from the eastern US with an average response time of 186 ms. Impressive, considering that is a distance of over 5,000 km! If my calculations are right, that works out to about 1/5th the speed of light.
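The "1/5th" figure can be reproduced from the numbers above. Treat the 186 ms ping as a round trip over 2 × 5,000 km and take c ≈ 299,792 km/s:

$$
v \approx \frac{2 \times 5000\ \text{km}}{0.186\ \text{s}} \approx 53{,}800\ \text{km/s},
\qquad
\frac{v}{c} \approx \frac{53{,}800}{299{,}792} \approx 0.18 \approx \frac{1}{5.6}
$$

This is a conservative estimate. The 5,000 km figure is a lower bound, and the ping time also includes routing and processing delays, so the actual signal speed along the path is higher.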

Closing Thoughts

If you made it this far, hopefully you enjoyed this write-up on our CDN. Operating a web application at this kind of global scale is a lot of fun and a lot of hard work. The most incredible part is that anyone can tap into this infrastructure for as little as $39/month. That is the true power of the cloud right there! Not our customer yet? You should be. Get in touch with us.

Mike Cherichetti